Her 6-Year-Old Told Her He Wanted to Die. So She Built an AI Company to Save Him

The booming world of AI-powered mental health support is a minefield. From chatbots that give dangerously incorrect medical advice to AI companions that encourage self-harm, the headlines are filled with cautionary tales.

Prominent apps like Character.AI and Replika have faced backlash over harmful and inappropriate responses, and academic studies have raised alarms.

Two recent studies from Stanford University and Cornell University found that AI chatbots often stigmatize conditions such as alcohol dependence and schizophrenia, respond “inappropriately” to some common conditions, and “encourage clients’ delusional thinking.” They warned of the risks of over-reliance on AI without human supervision.

But against this backdrop, Hafeezah Muhammad, a Black woman, is building something different. And she is doing it for painfully personal reasons.

“In October 2020, my son, who was six years old, came to me and told me that he wanted to kill himself,” she recounts, her voice still carrying the weight of that moment. “My heart broke. I did not see it coming.”

At the time, she was an executive at a national mental health company, someone who knew the system inside and out. Yet she still could not get her son, who has a disability and is on Medicaid, into care.

“Only 30% or fewer of providers even accept Medicaid,” she explained. “More than 50% of children in the United States now come from multicultural households, and there were no solutions for us.”

She says she was terrified, embarrassed, and worried about the stigma of having a child who was struggling. So she built the thing she could not find.

Today, Muhammad is the founder and CEO of Backpack Healthcare, a Maryland-based provider that has served more than 4,000 pediatric patients, most of them on Medicaid. The company is built on a radical idea: that technology can support mental health without replacing the human touch.

Practical AI, not a replacement therapist

On paper, Backpack looks like many other startups. In practice, its approach to AI is deliberately practical, focusing on “boring” but impactful applications that empower human therapists.

An algorithm matches children with the best possible therapist on the first try (91% of patients stay with their first match). AI also drafts treatment plans and session notes, giving clinicians back the hours they used to lose to paperwork.

“Our providers were spending more than 20 hours a week on administrative tasks,” Muhammad explains. “But they are the editors.”

This human-in-the-loop approach is central to Backpack’s philosophy.

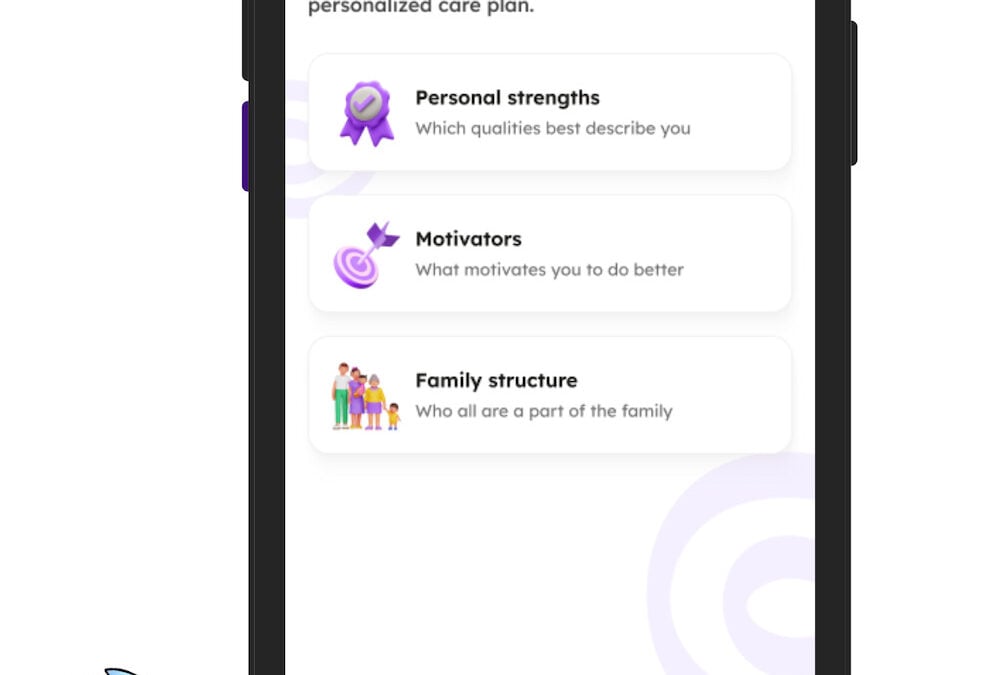

Backpack’s most important differentiator lies in its strong ethical guardrails. Its 24/7 AI care companion is represented by “Zipp,” a friendly cartoon character. It is a deliberate choice to avoid the dangerous “illusion of empathy” seen in other chatbots.

“We wanted to make it clear that this is a tool, not a human being.”

Investor Nans Rivat of Pace Healthcare Capital calls this the trap of “LLM empathy,” where “users forget that you are talking to a tool at the end of the day.” Rivat points to cases such as Character.AI, where the absence of these guardrails led to “tragic” outcomes.

Muhammad is also adamant about data privacy. She explains that individual patient data is never shared without clear, signed consent. However, the company does use aggregated, anonymized data to report trends to its partners, such as how quickly a group of patients was scheduled for care.

More importantly, Backpack uses its internal data to improve clinical outcomes. By tracking measures such as anxiety or depression levels, the system can flag a patient who may need a higher level of care, ensuring that the technology helps children get better faster.

Crucially, Backpack’s system also includes an immediate crisis detection protocol. If a child types a phrase indicating suicidal ideation, the chatbot immediately responds with crisis hotline numbers and instructions to call 911. At the same time, an “immediate distress message” is sent to Backpack’s human crisis response team, which reaches out directly to the family.

“We are not trying to replace the therapist,” says Rivat. “We are adding a tool that did not exist before, with safety built in.”

Building the human backbone

Beyond its ethical technology, Backpack is also tackling the national therapist shortage. Unlike doctors, therapists have traditionally had to pay out of pocket for the expensive supervision hours required for licensure.

To combat this, Backpack launched a two-year paid residency program that covers these costs, creating a pipeline of dedicated, well-trained therapists. More than 500 people apply each year, and the program boasts an impressive 75% retention rate.

In 2021, Dr. Vivek Murthy, then the U.S. Surgeon General, called mental health “the defining public health issue of our time,” referring to the mental health crisis affecting young people.

Muhammad does not dodge the criticism that AI could make things worse.

“Either someone else builds this technology without the right guardrails, or I can, as a mother, make sure it is done properly,” she says.

Her son is now 11 years old, thriving, and serves as Backpack’s “Chief Child Innovator.”

“If we do our job right, they will not need us forever,” says Muhammad. “We are giving them the tools now, so they grow into adults.”

