ChatGPT and Claude enter the United States government

Two billion-dollar minds are making their way to Capitol Hill: ChatGPT and Claude.

The large language models (LLMs) were offered to the federal government this month by their makers, OpenAI and Anthropic, for only one dollar. The partnerships come amid the Trump administration's push to accelerate the adoption of artificial intelligence in the public sector.

By offering models that cost billions of dollars virtually for free to one of the 15 largest workforces in the country, OpenAI and Anthropic have secured a place in the legislative toolbox, a position that could prove lucrative once the free trials end and workers have grown reliant on their products.

With OpenAI unveiling GPT-5, which it claims reaches “PhD-level” performance, and Anthropic releasing Claude Opus 4, which is said to be able to run “long tasks” for seven hours, the question becomes: What does it mean for the government to have these capabilities at its fingertips?

At best, LLMs could save taxpayers billions of dollars annually by streamlining operations and improving policy outcomes. But processing sensitive data with products owned by private companies, and relying on imperfect products that sometimes hallucinate, is risky.

“I am a little worried that just throwing LLMs at workers and telling them, ‘You can use this now, it is very cheap, and it does everything,’ will not really improve efficiency much, and may introduce a set of new risks,” says Mia Hoffman, a fellow at Georgetown's Center for Security and Emerging Technology. “To be clear, LLMs are not god-like systems; they come with a set of issues.”

The federal AI push

“Agencies must remove bureaucratic bottlenecks and redefine AI governance as an enabler of effective and safe innovation,” the White House Office of Management and Budget (OMB) wrote in one of its memos published in April. The memos replace Biden-era guidance on federal AI deployment and build on President Donald Trump's executive order, Maintaining American Leadership in Artificial Intelligence.

The administration's AI Action Plan, published on July 23, lays out further policy recommendations, including an “AI procurement toolbox” of models approved for agency use. The plan also calls for all employees whose work could benefit from frontier language models to have access to, and appropriate training for, these tools.

Under the agreements, ChatGPT and Claude are available to agencies, and Claude's availability is expected to extend to the judiciary and members of Congress “awaiting their approval.”

So, how will they be used?

Exactly how the models will be deployed has not been disclosed, but the AI Action Plan cites possible applications such as “accelerating slow and often manual internal processes, streamlining public interactions, and many others.”

Back-office operations are a probable use, says Lindsay Gorman, managing director and senior fellow of the Technology Program at the German Marshall Fund.

The plan also calls for some agencies to pilot the use of AI “to improve the provision of services to the public.” This could mean citizen-facing AI assistants, similar to how companies use chatbots for customer service.

She also expects “heavy” use of reasoning models, for purposes such as accelerating scientific research. OpenAI claims its o3 and o4-mini models, which are included in ChatGPT Enterprise, are the first that can “think with images.” In theory, users can input charts or diagrams, and the models will analyze them during their reasoning process before answering. A report from The Information claims the new models can synthesize expertise across fields such as nuclear fission or pathogen detection and then suggest new experiments or ideas.

More commonly, LLMs are likely to be used to analyze datasets or summarize documents.

“You can imagine a member of the House of Representatives or the Senate gathering research on certain bills or policy ideas to help inform their work,” Gorman says.

Some of this is already happening. Federal agencies publish inventories of how they deploy AI, which included 2,133 use cases as of January. The Department of Justice already uses ChatGPT for things such as creating content, drafting reports, and review. In January, its inventory listed 241 AI entries, an increase of more than 1,500% over the previous year.

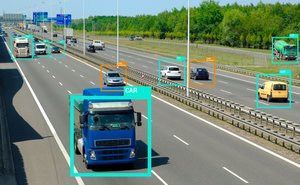

U.S. Immigration and Customs Enforcement (ICE) was running 19 AI use cases as of January, including an investigative prioritization tool that uses machine learning to rank the targets of Homeland Security investigations by assigning each one a score. ICE claims this is particularly important in its counter-opioid and fentanyl missions, where time is of the essence. One can imagine this extensive collection of data being fed into an LLM for faster and more detailed analysis.

ICE has also repeatedly accessed a nationwide network of AI-powered cameras through local and state law enforcement agencies, without establishing an official contract with the software provider, 404 Media reported in May.

Privacy concerns

OMB directs agencies to ensure that vendors collect and retain data only when “reasonably necessary” for the purpose of the contract. But for agencies to get value from an LLM, they must feed it their data, which means most uses may meet this threshold.

“If you are entering queries into a model, companies will be able to access those queries. How is that going to be protected?” Gorman says. “What are the guarantees that if a Senate staffer enters sensitive political information, that data will be protected, especially from foreign actors?” She adds that many startups lack strong safeguards for government data.

LLMs also create new attack vectors, such as “indirect prompt injection,” and more opportunities for data leaks. “New entry points for hackers move faster than policy,” she warns.

Accuracy and bias

LLMs can also produce errors and reflect historical biases. A 2024 Purdue University study found that about half of ChatGPT's answers to programming questions contained incorrect information.

While the models do not actually work like human minds, they still reflect and sometimes amplify societal biases. For example, a paper titled “Gender Bias and Stereotypes in Large Language Models” found that LLMs are 3-6 times more likely to make gender-stereotyped choices, such as “nurse” for women and “engineer” for men.

In one real-world case, Wired revealed that in Rotterdam, the Dutch government used an algorithm to detect welfare fraud that gave higher risk scores to immigrants, single parents, women, young people, and non-Dutch speakers without good reason. This led to unfair investigations and benefit suspensions for marginalized groups. After audits found the system to be opaque and discriminatory, it was suspended in 2021.

To mitigate these risks, OMB requires annual risk assessments for “high-impact AI,” meaning systems whose outputs serve as a principal basis for legal or otherwise significant decisions affecting civil rights or safety. Perhaps unsurprisingly, high-impact use cases are concentrated: the Department of Justice and the Department of Homeland Security make up only 4% of agencies but account for 45% of these cases.

This means LLMs could, in theory, be used as a basis for consequential decisions that affect civil rights or public health. Gorman warns that automating some decisions, especially in the judicial system, where decisions should be open to appeal, would carry “very high” risks.

However, she believes the near-term priority will be working out some “kinks” in mission-support functions, a lower-risk trial period that she considers “wise.”


2025-08-16 09:12:00